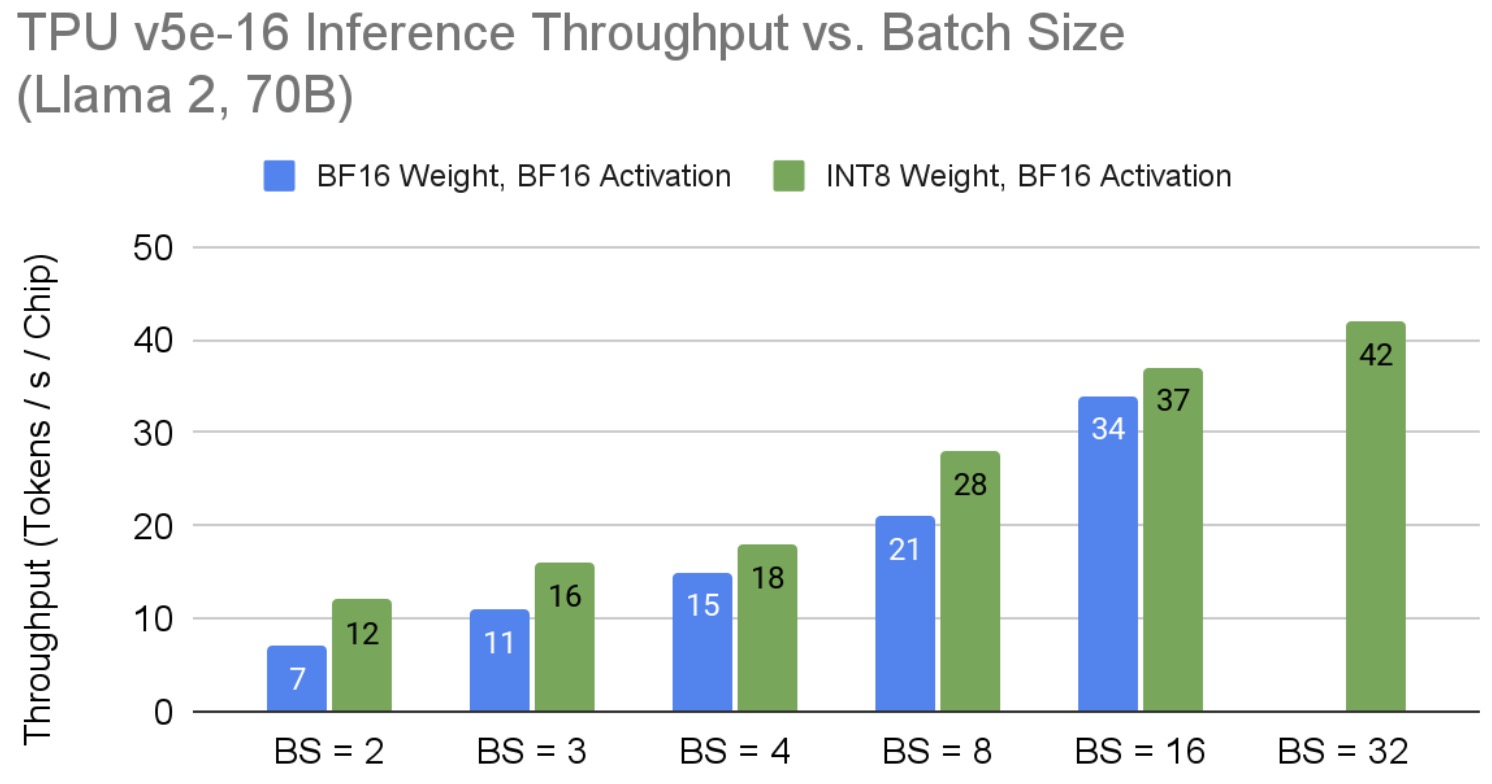

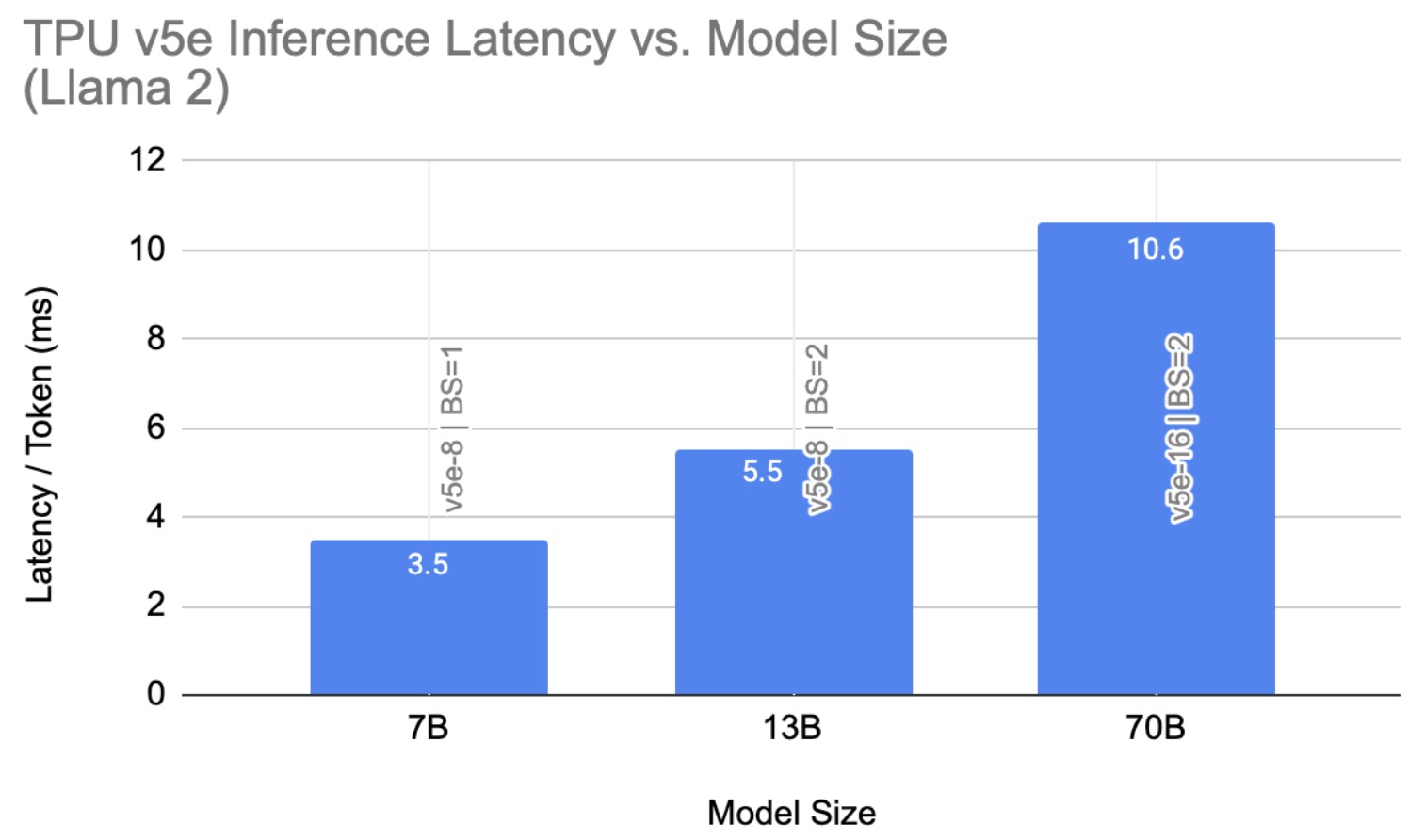

How Cloud TPU v5e accelerates large-scale AI inference

Sabastian Mugazambi on LinkedIn: Cloud TPU v5e is generally available

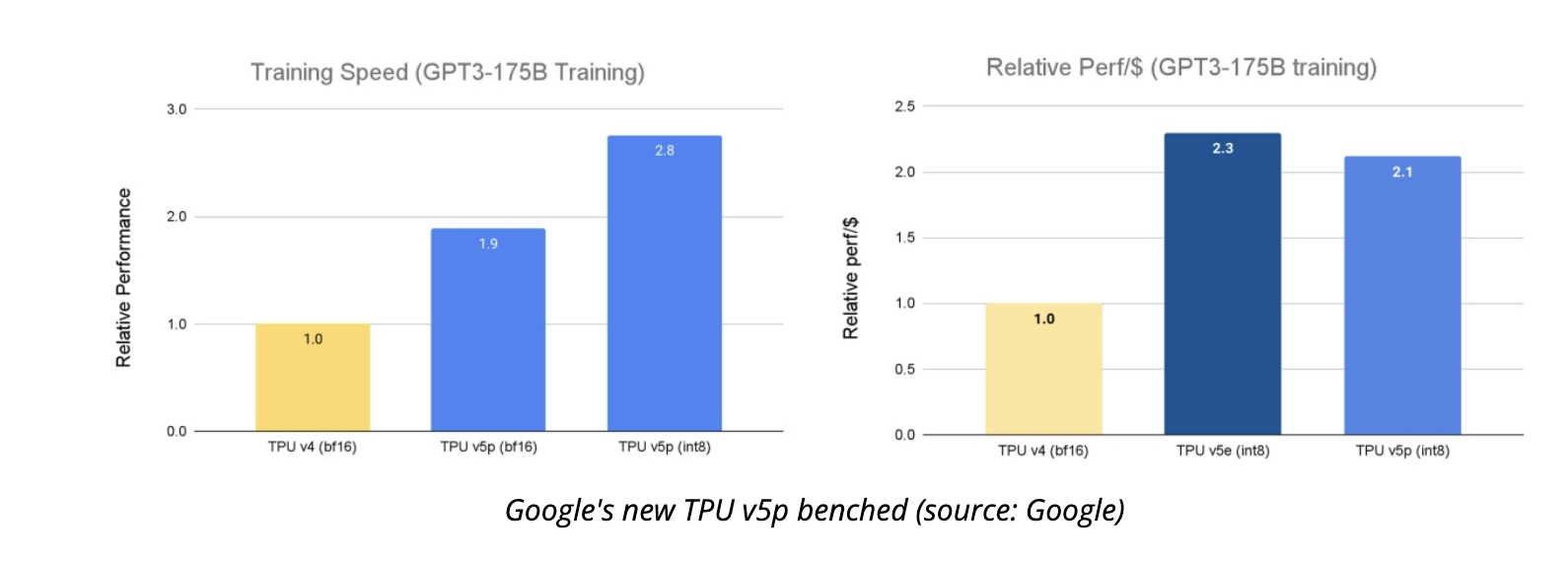

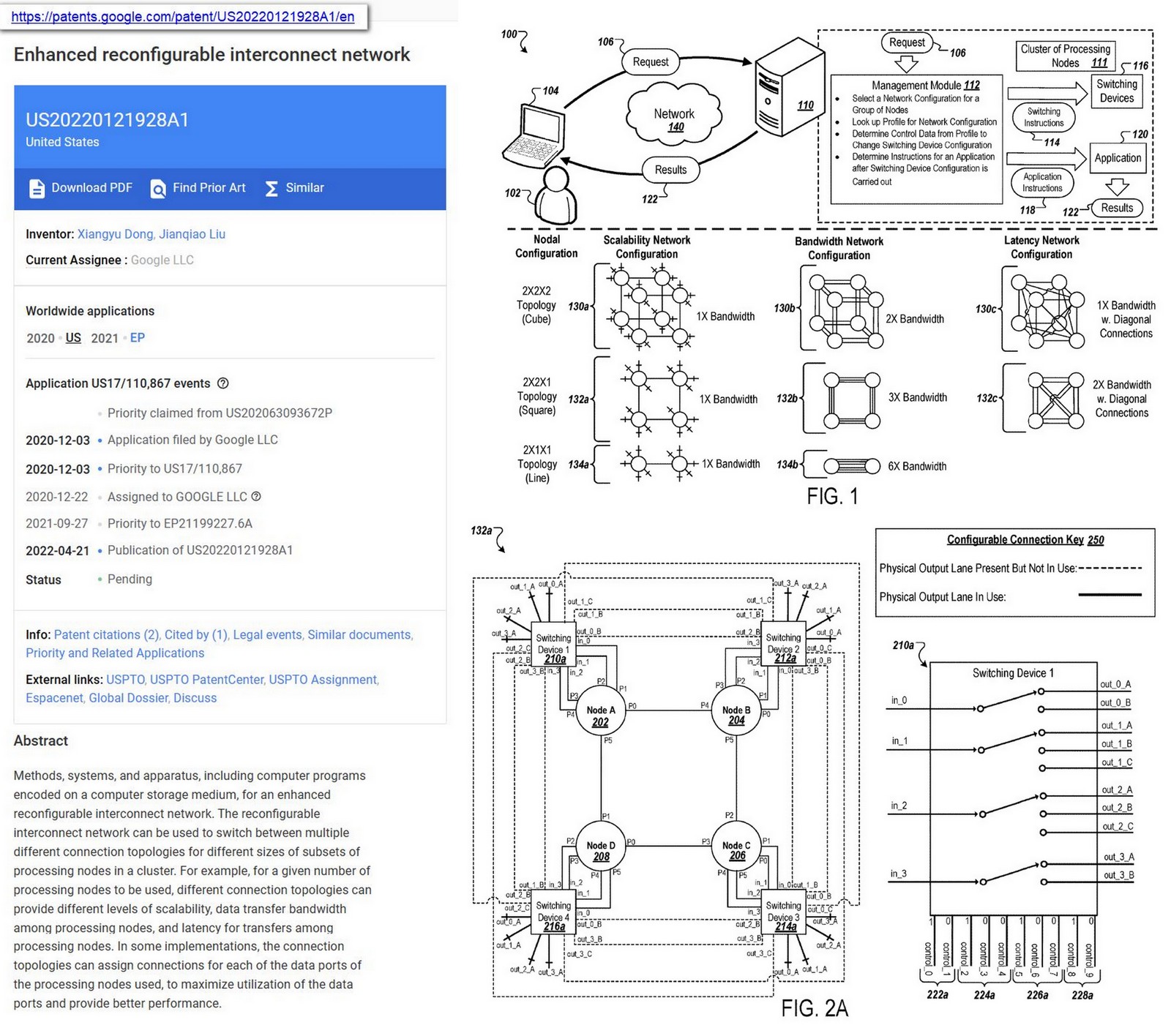

AI HyperComputer + Cloud TPU v5p: A Robust Adaptable AI Accelerator

OGAWA, Tadashi on X: Cloud TPU v5e accelerates large-scale AI inference (Sep 1, 2023): enables high-performance and cost-effective inference for a broad range of AI workloads, with up to 1.7x lower latency

High-Performance Llama 2 Training and Inference with PyTorch/XLA on Cloud TPUs

Alexander Erfurt on LinkedIn: How Cloud TPU v5e accelerates large-scale AI inference

Google Cloud Next everything announced: Infusing generative AI everywhere

Google Introduces 'Hypercomputer' to Its AI Infrastructure

Google unveils TPU v5p pods to accelerate AI training • The Register

Google Cloud Platform Technology Nuggets — Sep 1–15, 2023 Edition, by Romin Irani, Google Cloud - Community
